
**Export to File:**

```
/export md notes.md
/export json backup.json
/export html report.html
```

**View Session Stats:**

```
/stats
/credits
```

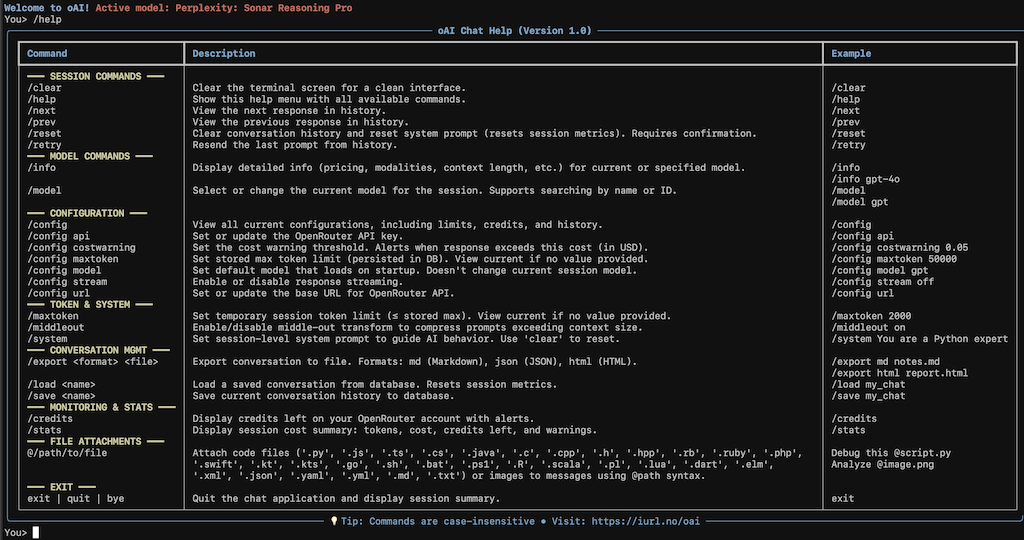

## Command Reference

Use `/help` within the application for a complete command reference organized by category:

- Session Commands
- Model Commands
- Configuration
- Token & System
- Conversation Management
- Monitoring & Stats
- File Attachments

## Configuration Options

- API Key: `/config api`
- Base URL: `/config url`
- Streaming: `/config stream on|off`
- Default Model: `/config model`
- Cost Warning: `/config costwarning`

Generated by oAI Chat • https://iurl.no/oai