-

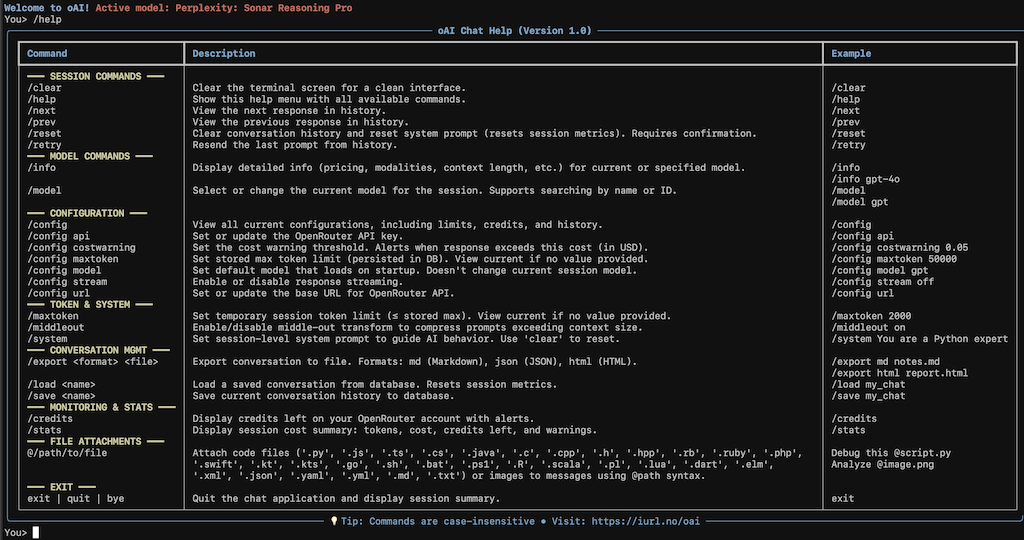

-*Screenshot from version 1.0 - MCP interface shows mode indicators like `[🔧 MCP: Files]` or `[🗄️ MCP: DB #1]`*

+- Python 3.10-3.13

+- OpenRouter API key ([get one here](https://openrouter.ai))
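The requirements pin Python to 3.10 through 3.13. Below is a minimal pre-install check. It assumes `python3` is the interpreter you will run `pip install -e .` with. `oai_python_ok` is a hypothetical helper and is not part of oai:

```bash
# Hypothetical helper (not part of oai): succeed only when a version string
# such as "3.12" or "3.12.4" falls in the 3.10-3.13 range listed above.
oai_python_ok() {
  local ver="$1" major rest minor
  major="${ver%%.*}"   # text before the first dot
  rest="${ver#*.}"     # text after the first dot
  minor="${rest%%.*}"  # text before the next dot, if any
  # Reject empty or non-numeric parts before doing integer comparisons.
  case "$major" in ''|*[!0-9]*) return 1 ;; esac
  case "$minor" in ''|*[!0-9]*) return 1 ;; esac
  [ "$major" -eq 3 ] && [ "$minor" -ge 10 ] && [ "$minor" -le 13 ]
}

# Ask the interpreter for its MAJOR.MINOR version and check it.
if command -v python3 >/dev/null 2>&1; then
  current="$(python3 -c 'import sys; print("%d.%d" % sys.version_info[:2])')"
  if oai_python_ok "$current"; then
    echo "python3 $current is supported"
  else
    echo "python3 $current is outside 3.10-3.13" >&2
  fi
fi
```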

````diff
 ## Installation
-### Option 1: From Source (Recommended for Development)
-
-#### 1. Install Dependencies
+### Option 1: Install from Source (Recommended)
 ```bash
-pip install -r requirements.txt
+# Clone the repository
+git clone https://gitlab.pm/rune/oai.git
+cd oai
+
+# Install with pip
+pip install -e .
 ```
-#### 2. Make Executable
+### Option 2: Pre-built Binary (macOS/Linux)
-```bash
-chmod +x oai.py
-```
-
-#### 3. Copy to PATH
-
-```bash
-# Option 1: System-wide (requires sudo)
-sudo cp oai.py /usr/local/bin/oai
-
-# Option 2: User-local (recommended)
-mkdir -p ~/.local/bin
-cp oai.py ~/.local/bin/oai
-
-# Add to PATH if needed (add to ~/.bashrc or ~/.zshrc)
-export PATH="$HOME/.local/bin:$PATH"
-```
-
-#### 4. Verify Installation
-
-```bash
-oai --version
-```
-
-### Option 2: Pre-built Binaries
-
-Download platform-specific binaries:
-- **macOS (Apple Silicon)**: `oai_vx.x.x_mac_arm64.zip`
-- **Linux (x86_64)**: `oai_vx.x.x-linux-x86_64.zip`
+Download from [Releases](https://gitlab.pm/rune/oai/releases):
+- **macOS (Apple Silicon)**: `oai_v2.1.0_mac_arm64.zip`
+- **Linux (x86_64)**: `oai_v2.1.0_linux_x86_64.zip`
 ```bash
 # Extract and install
-unzip oai_vx.x.x_mac_arm64.zip # or `oai_vx.x.x-linux-x86_64.zip`
-chmod +x oai
-mkdir -p ~/.local/bin # Remember to add this to your path. Or just move to folder already in your $PATH
+unzip oai_v2.1.0_*.zip
+mkdir -p ~/.local/bin
 mv oai ~/.local/bin/
+
+# macOS only: Remove quarantine and approve
+xattr -cr ~/.local/bin/oai
+# Then right-click oai in Finder → Open With → Terminal → Click "Open"
 ```
````
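The two archive names listed for v2.1.0 follow an `oai_v<version>_<platform>.zip` pattern. The sketch below picks the matching archive from `uname` output. It assumes later releases keep that pattern, which is only confirmed here for v2.1.0. `oai_asset_name` is hypothetical:

```bash
# Hypothetical helper (not part of oai): map `uname -s` and `uname -m`
# output to one of the archive names listed for the release.
# Assumes the v2.1.0 naming pattern holds for other versions.
oai_asset_name() {
  local os="$1" arch="$2" version="$3"
  case "$os/$arch" in
    Darwin/arm64) echo "oai_v${version}_mac_arm64.zip" ;;
    Linux/x86_64) echo "oai_v${version}_linux_x86_64.zip" ;;
    *)
      echo "no pre-built binary for $os/$arch; install from source" >&2
      return 1
      ;;
  esac
}

# Print the archive name for this machine before downloading it
# from the Releases page; unsupported platforms only print a warning.
oai_asset_name "$(uname -s)" "$(uname -m)" 2.1.0 || true
```

On macOS, `xattr -cr` clears every extended attribute on the file. If you only want to drop the download flag, `xattr -d com.apple.quarantine ~/.local/bin/oai` removes just that attribute.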

````diff
-
-### Alternative: Shell Alias
+### Add to PATH
 ```bash
-# Add to ~/.bashrc or ~/.zshrc
-alias oai='python3 /path/to/oai.py'
+# Add to ~/.zshrc or ~/.bashrc
+export PATH="$HOME/.local/bin:$PATH"
 ```
````
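If you append the `export` line by hand, or from a setup script that runs more than once, your rc file can end up with duplicate copies. Below is a minimal sketch of an idempotent version. It assumes a POSIX-style rc file such as `~/.zshrc` or `~/.bashrc`. `oai_add_path_line` is a hypothetical helper:

```bash
# Hypothetical helper (not part of oai): append the README's PATH line to an
# rc file only if that exact line is not already there.
oai_add_path_line() {
  local rc="$1"
  # Single quotes keep $HOME and $PATH unexpanded, matching the README line.
  local line='export PATH="$HOME/.local/bin:$PATH"'
  touch "$rc"
  if grep -qxF "$line" "$rc"; then
    return 0  # already present, nothing to do
  fi
  # If the file does not end in a newline, add one so the new line stays separate.
  if [ -s "$rc" ] && [ -n "$(tail -c 1 "$rc")" ]; then
    printf '\n' >> "$rc"
  fi
  printf '%s\n' "$line" >> "$rc"
}

# Usage: update your shell's rc file, then start a new shell or source it.
# oai_add_path_line "$HOME/.zshrc" && . "$HOME/.zshrc"
```

After reloading the shell, `command -v oai` should print the full path to the binary in `~/.local/bin`.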

````diff
 ## Quick Start
-### First Run Setup
+```bash
+# Start the chat client
+oai chat
+
+# Or with options
+oai chat --model gpt-4o --mcp
+```
+
+On first run, you'll be prompted for your OpenRouter API key.
+
+### Basic Commands
 ```bash
-oai
+# In the chat interface:
+/model # Select AI model
+/help # Show all commands
+/mcp on # Enable file/database access
+/stats # View session statistics
+exit # Quit
 ```
-On first run, you'll be prompted to enter your OpenRouter API key.
+## MCP (Model Context Protocol)
-### Basic Usage
+MCP allows the AI to interact with your local files and databases.
+
+### File Access
 ```bash
-# Start chatting
-oai
+/mcp on # Enable MCP
+/mcp add ~/Projects # Grant access to folder
+/mcp list # View allowed folders
-# Select a model
-You> /model
-
-# Enable MCP for file access
-You> /mcp on
-You> /mcp add ~/Documents
-
-# Ask AI to help with files (read-only)
-[🔧 MCP: Files] You> List all Python files in Documents
-[🔧 MCP: Files] You> Read and explain main.py
-
-# Enable write mode to let AI modify files
-You> /mcp write on
-[🔧✍️ MCP: Files+Write] You> Create a new Python file with helper functions
-[🔧✍️ MCP: Files+Write] You> Refactor main.py to use async/await
-
-# Switch to database mode
-You> /mcp add db ~/myapp/data.db
-You> /mcp db 1
-[🗄️ MCP: DB #1] You> Show me all tables
-[🗄️ MCP: DB #1] You> Find all users created this month
-```
-
-## MCP Guide
-
-### File Mode (Default)
-
-**Setup:**
-```bash
-/mcp on # Start MCP server
-/mcp add ~/Projects # Grant access to folder
-/mcp add ~/Documents # Add another folder
-/mcp list # View all allowed folders
-```
-
-**Natural Language Usage:**
-```
+# Now ask the AI:
 "List all Python files in Projects"
-"Read and explain config.yaml"
+"Read and explain main.py"
 "Search for files containing 'TODO'"
-"What's in my Documents folder?"
 ```
-**Available Tools (Read-Only):**
-- `read_file` - Read complete file contents
-- `list_directory` - List files/folders (recursive optional)
-- `search_files` - Search by name or content
-
-**Available Tools (Write Mode - requires `/mcp write on`):**
-- `write_file` - Create new files or overwrite existing ones
-- `edit_file` - Find and replace text in existing files
-- `delete_file` - Delete files (always requires confirmation)
-- `create_directory` - Create directories
-- `move_file` - Move or rename files
-- `copy_file` - Copy files to new locations
-
-**Features:**
-- ✅ Automatic .gitignore filtering (read operations only)
-- ✅ Skips virtual environments (venv, node_modules)
-- ✅ Handles large files (auto-truncates >50KB)
-- ✅ Cross-platform (macOS, Linux, Windows via WSL)
-- ✅ Write mode OFF by default for safety
-- ✅ Delete operations require user confirmation with LLM's reason
-
-### Database Mode
-
-**Setup:**
-```bash
-/mcp add db ~/app/database.db # Add SQLite database
-/mcp db list # View all databases
-/mcp db 1 # Switch to database #1
-```
-
-**Natural Language Usage:**
-```
-"Show me all tables in this database"
-"Find records mentioning 'error'"
-"How many users registered last week?"
-"Get the schema for the orders table"
-"Show me the 10 most recent transactions"
-```
-
-**Available Tools:**
-- `inspect_database` - View schema, tables, columns, indexes
-- `search_database` - Full-text search across tables
-- `query_database` - Execute read-only SQL queries
-
-**Supported Queries:**
-- ✅ SELECT statements
-- ✅ JOINs (INNER, LEFT, RIGHT, FULL)
-- ✅ Subqueries
-- ✅ CTEs (Common Table Expressions)
-- ✅ Aggregations (COUNT, SUM, AVG, etc.)
-- ✅ WHERE, GROUP BY, HAVING, ORDER BY, LIMIT
-- ❌ INSERT/UPDATE/DELETE (blocked for safety)
-
 ### Write Mode
-**Enable Write Mode:**
 ```bash
-/mcp write on # Enable write mode (shows warning, requires confirmation)
+/mcp write on # Enable file modifications
+
+# AI can now:
+"Create a new file called utils.py"
+"Edit config.json and update the API URL"
+"Delete the old backup files" # Always asks for confirmation
 ```
-**Natural Language Usage:**
-```
-"Create a new Python file called utils.py with helper functions"
-"Edit main.py and replace the old API endpoint with the new one"
-"Delete the backup.old file" (will prompt for confirmation)
-"Create a directory called tests"
-"Move config.json to the config folder"
-```
-
-**Important:**
-- ⚠️ Write mode is **OFF by default** and resets each session
-- ⚠️ Delete operations **always** require user confirmation
-- ⚠️ All operations are limited to allowed MCP folders
-- ✅ Write operations ignore .gitignore (can write to any file in allowed folders)
-
-**Disable Write Mode:**
-```bash
-/mcp write off # Disable write mode (back to read-only)
-```
-
-### Mode Management
+### Database Mode
 ```bash
-/mcp status # Show current mode, write mode, stats, folders/databases
-/mcp files # Switch to file mode
-/mcp db
````
